Is my dog conscious?

On the tip of my tongue

Say these words out loud.

‘The tip of the tongue, the lips and the teeth.’

Whilst you were speaking, what were your tongue and lips doing? How were you breathing? Can you breathe in and still speak?

Now try reciting this (rather peculiar) poem. It contains every sound (phoneme) used in spoken English.

The pleasure of Shawn’s company

Is what I most enjoy.

He put a tack on Ms. Yancey’s chair

When she called him a horrible boy.

At the end of the month he was flinging two kittens

Across the width of the room.

I count on his schemes to show me a way now

Of getting away from my gloom.

This ‘panphonic’ poem was written by linguist Neal Whitman, and used in the film Mission: Impossible 3 (2006).

Did you notice that whilst you were talking, your tongue and lips never moved sideways?

Reaching out across a divide: Israeli Prime Minister Yitzhak Rabin, U.S. President Bill Clinton, and PLO chairman Yasser Arafat at the White House in 1993 (Image: Wikimedia Commons)

Talking connects us across all cultural boundaries, and sets us apart from other animals. Our diverse vocal repertoire, contributing to nearly 7,000 languages worldwide, has no equivalent anywhere in the animal kingdom. We arrange complex sounds into phrases with rhythm, stress and intonation (prosody), and deliver them with visual emphasis using facial expressions and gestures.

Traditionally, linguists have considered that our speech is too complex to have arisen by natural selection, suggesting instead that it results from a sudden event such as a ‘freak’ genetic mutation.

This view is at odds with what we understand about the rest of our biology. Evolution works by selecting from existing variation in forms and behaviours. Adaptations are not a ‘best-design’ solution to a survival problem, but a balance of innovations that arrive with inherited constraints.

During our first seven years of life we learn to articulate the sounds unique to the language(s) we hear. We structure these sounds into syllables, and use them to build words, phrases and sentences that convey meaning. We also use these sounds to coin new words and invent new meanings for these sounds. For our ancestors to begin to do this, however, the physical ability to make this diversity of sounds must already have been in place.

How do we physically produce speech sounds?

![X-rays of a human jaw, taken by Dr H. Trevelyan George at St. Bartholomew's Hospital, London, in January, 1917. The black dashed line in these x-rays is a thin metal chain on the tongue’s upper surface. The pictures reveal how it changes position when producing the ‘cardinal’ vowels [i, u, a, ɑ]. These sounds are voiced by controlling the flow of breath and causing vibrations in the vocal cords. English consonants are voiced or voiceless, and are made using five main movements. We touch the lips together or against the teeth, place the tongue between or onto the back of the teeth, or against the hard palate, and lift the tongue against the soft palate. We also use an open-mouthed turbulent flow of air to make aspirated sounds as in the ‘h’ of ‘hair’ (Image: Wikimedia Commons)](http://www.42evolution.org/wp-content/uploads/2014/09/E1-400x392.jpg)

X-rays of a human jaw, taken by Dr H. Trevelyan George at St. Bartholomew’s Hospital, London, in January, 1917. The black dashed line in these x-rays is a thin metal chain on the tongue’s upper surface. The pictures reveal how it changes position when producing the ‘cardinal’ vowels [i, u, a, ɑ]. These sounds are voiced by controlling the flow of breath and causing vibrations in the vocal cords. English consonants are voiced or voiceless, and are made using five main movements. We touch the lips together or against the teeth, place the tongue between or onto the back of the teeth, or against the hard palate, and lift the tongue against the soft palate. We also use an open-mouthed turbulent flow of air to make aspirated sounds as in the ‘h’ of ‘hair’ (Image: Wikimedia Commons)

Sound generation (phonation) in almost all mammals involves coordinating their breathing with the control of tension in the vocal folds of the larynx. We selectively process the harmonics from these basic sounds, and articulate precise sound sequences using rapid and rhythmic movements of the tongue, lips and associated structures.

At the simplest level, speaking involves alternating open-closed movements of the jaw at the same time as generating sound in the larynx. This produces an alternating stream of open (resonant) and closed (muted) sounds. We build words and phrases from these alternating ‘segments’, using the lips and tongue to produce precisely articulated consonants and control pitch, timbre, tone and stress.

We vocalise as other mammals do, using our feeding and breathing apparatus. The muscle movements that operate both chewing and speaking are controlled by rhythmic nerve impulses from ‘Central Pattern Generators’. These are autonomous nerve ‘modules’ in the lower brain and spinal cord. They co-ordinate all our repetitive movements, from walking to vomiting.

Which aspects of our speaking abilities are found in other animals?

Jack, a military working dog, barking during his training; Rochester, New York, 2009. Dogs are unusual amongst vocal mammals; their calls include barking sequences (bow-wow-wow) that alternate between mostly identical open-close jaw movements. In humans, this alternation is a universal speaking mode. The dog barking in this video is also making other coupled rhythmic communication signals – note the tail wagging and ear movements (Image: Wikimedia Commons)

There is no animal equivalent to the combined movements that make up our vocal cycle, although other animals make all of these movements. Most mammals call with their mouths open, using coupled Central Pattern Generators that link the out-breath with sound production (phonation) in the larynx. A few animals such as dogs make occasional calls using a partial open-close oscillating jaw movement, although they typically repeat the same sound (bow-wow-wow). We coordinate the circuits for breath control and phonation with another set of pattern generators that operate the rhythmic movements of our jaw, lips and tongue.

These movements have other functions, as in the suckling of newborns. This ability defines us as mammals. But these movements may also be linked to talking. James Lund and co-workers suggest that the human Central Pattern Generators controlling chewing, licking and sucking also participate in speech.

Peter MacNeilage goes on to suggest that the rhythmic repetitive movements used for eating have been coordinated in mammals since the clade arose some 200 million years ago, and that they lie at the root of our articulation skills. As we speak, our tongue moves up and down, and front to back in the mouth. Chewing also includes sideways motions of the tongue and jaw. These are not included in our vocal movements; indeed they would leave us more prone to biting our tongue. MacNeilage proposes that coupling our pre-existing capability for making vocal calls with this subset of movements used during eating gave our more immediate ancestors the capacity to articulate simple ‘proto-syllables’.

Baby chimpanzee (Pan troglodytes) at Beijing zoo. Chimpanzees can be taught to recognise several hundred human words, but none have reproduced these verbally, even if raised in a human environment. Chimpanzees make occasional calls in a series (something like syllables in speech), although repetition of one sound is more usual. In the variable-sound calls, the arrangement of components does not seem to be significant. In contrast, we can say e.g. ‘cat’, ‘tack’ and ‘act’, using varied sequences of the same sounds to convey different information (Image: Wikimedia Commons)

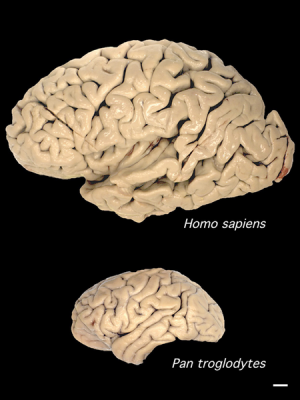

Does this then apply to our nearest relatives, the chimps? Philip Lieberman has shown that their vocal apparatus is anatomically suitable to produce a range of human syllables, and yet they do not speak. This suggests that they cannot coordinate their Central Pattern Generator signals for vocal sound production and chewing. The reason for this appears to be linked to differences in cognition. Recent work comparing the neurology underpinning chimp calls and human word-based speech has found that the neural circuits driving these respective vocalisations originate from different parts of the brain.

However, chimps and other primates do make rhythmical face and jaw movements, producing lipsmacks, tongue smacks, teeth-chatters and other facial gestures. Lipsmacks involve moving the jaw without the teeth coming together, as in human speech, and are often made by juveniles as they approach their mother to suckle. Primate grooming is a one-to-one interaction using touch, eye contact and other positive signals, and often involves ‘taking turns’.

We are able to precisely control the pitch and resonance, as well as the rhythm of speech and the articulation of our sounds, thanks to our flexible and dextrous tongue. The tongues of new-born babies lie flat in their mouths. The permanent descent of our larynx occurs early during our development; this raises the tongue in the mouth, allowing it to move freely.

Male koala (Phascolarctos cinereus) at Billabong Koala and Aussie Wildlife Park, Port Macquarie, New South Wales, Australia. Almost all mammals lower their larynx to vocalise. Koalas are unusual; along with lions, deer and humans they have a permanently descended larynx. In these non-human animals this results in a dramatically resonant deep male mating call. Unlike us, these other animals have pronounced differences in body form between males and females (Image: Wikimedia Commons)

The ability to learn and reproduce complex sounds in the form of song has arisen independently in whales, humans, and at least three times in birds. Only male songbirds make complex learned song calls; they sing to attract mates. In contrast, our language ability is gender-balanced. No other primates have elaborated male mating calls. However, the second descent of the larynx in boys during puberty suggests that sexual selection may have refined our control of the resonant qualities of our vocal tract.

How could natural selection have favoured our ancestors’ ability to produce these sounds?

Our vocal flexibility comes at a price; an increased individual risk of choking. Our ancestors’ ability to form ‘proto-words’ and ‘proto-song’ must have given their tribal group a selective advantage that outweighed this risk.

Strong bonds bring advantages to social groups including relative safety in numbers from predators, collective understanding of their environment, higher levels of parental care from the extended family (resulting in better juvenile survival), and coordination of hunting and foraging. Primates use grooming, which combines touch with emotionally-coded facial and vocal sound gestures, to make and maintain social bonds.

Chimpanzees (Pan troglodytes) grooming at Gombe Stream National Park, Tanzania. Grooming in primates cleans the outer body, decreases stress, allows acceptance into the group and reveals the social hierarchy. Chimps and other higher primates may utter pleasure-indicating sounds during grooming, particularly if being attended to by a higher status member of the tribe. Chimpanzee tribes range from 15 to 120 individuals. In their “fission-fusion society” all members know each other but feed, travel, and sleep in smaller groups of six or fewer. The membership of these small groups changes frequently (Image: Wikimedia Commons)

Robin Dunbar points out that the extent of primate grooming time can be predicted from their combined neocortex (‘thinking’ brain) size and social group size. It is thought our large-brained hominid ancestors lived in tribes of up to around 150 individuals, which would mean that they would need to spend up to 40% of their time manually grooming each other to maintain social bonds. Speech may have provided a time-saving alternative; a means of ‘vocally grooming’ others. A speaker may have been able to connect to and bond simultaneously with multiple individuals.

Human babies making new or unusual sounds quickly receive their parents’ attention. Ulrike Griebel and Kimbrough Oller suggest that our hominin ancestors’ babies may have produced sounds that provoked more parental attention and so more effective bonding. Their better survival would select for babies with vocal variation and flexibility.

A large pod of over 80 dusky dolphins (Lagenorhynchus obscurus) swimming together in South Bay, Kaikoura, New Zealand. Although there is some physical touching amongst individuals in the pod, dolphins and killer whales use vocal grooming to coordinate with each other when hunting, during migrations, and ‘play’ (Image: Wikimedia Commons)

Involuntary pleasure sounds encourage continued social interactions between primates of all ages. Perhaps our hominid ancestors learned to make diverse and pleasurable sounds as babies, and as a result were better equipped as adults to vocally groom their extended families.

Conclusions

- Fine motor control of the tongue, lips and jaw allows us to produce a huge repertoire of diverse sounds. This control likely comes from combining the movements used for eating with the production of vocal sounds.

- Our ancestors may have evolved this capability at a time when their social group size increased, with a more time-efficient form of grooming required if the cohesion of the ‘tribe’ was to be maintained.

- Selection for diverse and flexible speech sounds may have begun with babies using suckling and other sounds to gain more parental attention. These individuals would be more effective as adults at ‘vocally grooming’ their wider social group.

- Our ability to produce speech sounds is balanced between genders, suggesting that group selection rather than sexual selection has driven the evolution of this ability.

Text copyright © 2015 Mags Leighton. All rights reserved.

References

Arbib, M.A. (2005) From monkey-like action recognition to human language: an evolutionary framework for neurolinguistics. Behavioral and Brain Sciences 28, 105-124.

Bouchet, H. et al. (2013) Social complexity parallels vocal complexity: a comparison of three non-human primate species. Frontiers in Psychology 4, article 390.

Charlton, B. et al. (2011) Perception of male caller identity in koalas (Phascolarctos cinereus): acoustic analysis and playback experiments. PLoS ONE 6, e20329.

Fitch, W.T. (2010) The Evolution of Language. Cambridge University Press.

Green, S. and Marler, P. (1979) The analysis of animal communication. In Social Behavior and Communication (P. Marler, ed.) pp. 73-158. Springer.

Hauser, M. et al. (2002) The faculty of language: what is it, who has it, and how did it evolve? Science 298, 1569-1579.

Kimbrough Oller, D. and Griebel, U. (eds) (2008) Evolution of Communicative Flexibility: Complexity, Creativity, and Adaptability in Human and Animal Communication. Vienna Series in Theoretical Biology, MIT Press.

Lehman, J., Korstjens, A.H. and Dunbar, R.I.M. (2007) Group size, grooming and social cohesion in primates. Animal Behaviour 74, 1617-1629.

Lieberman, P. (2006) Toward an Evolutionary Biology of Language. Harvard University Press.

Lund, J.P. and Kolta, A. (2006) Brainstem circuits that control mastication: do they have anything to say during speech? Journal of Communication Disorders 39, 381-390.

MacNeilage, P. (2008) The Origin of Speech. Oxford University Press.

MacNeilage, P.F. and Davis, B.L. (2000) On the origin of internal structure of word forms. Science 288, 527-531.

Parr, L.A. et al. (2007) Classifying chimpanzee facial expressions using muscle action. Emotion 7, 172-181.

Pearson, K.G. (2000) Neural adaptation in the generation of rhythmic behaviour. Annual Review of Physiology 62, 723-753.

Redican, W.K. and Rosenblum, L.A. (1975) Facial expressions in nonhuman primates. Stanford Research Institute.

Titze, I. R. (1989) Physiologic and acoustic differences between male and female voices. The Journal of the Acoustical Society of America 85, 1699-1707.

Traxler, M.J. et al. (2012) What's special about human language? The contents of the "Narrow Language Faculty" revisited. Linguistics and Language Compass 6, 611-621.

van Wassenhove, V. (2013) Speech through ears and eyes: interfacing the senses with the supramodal brain. Frontiers in Psychology 4, article 388.

Weusthoff, S. et al. (2013) The siren song of vocal fundamental frequency for romantic relationships. Frontiers in Psychology 4, article 439.

Willemet, R. (2013) Reconsidering the evolution of brain, cognition, and behaviour in birds and mammals. Frontiers in Psychology 4, article 396.

Putting things into words

The dolphin calf is barely three weeks old.

His mother nuzzles at him, calling softly. The calf responds, mimicking her call.

This unique set of sounds is his signature; the name that mother is teaching him to recognise, and which she will use to call to her calf as she teaches her baby to hunt. Later he will use this signature whistle so that others in his own pod will recognise him.

Again and again as his mother calls this name, he repeats it back.

Words hold ideas in code. As in all communications, the meaning of a signal must be agreed between the sender and receiver. We give our words their meaning by shared agreement.

A bonobo (Pan paniscus) ‘fishing’ for termites using a stick tool, at San Diego Zoo (Image: Wikimedia Commons)

As we remember and recall them, we access the information they hold. Collectively we use our words as tools to store information in symbolic form, and so bring our memories ‘to mind’.

Human languages, whether sung or spoken, produce words by controlling the pitch and articulation of these distinct sets of sounds with the lips and tongue. We process our words in the brain through the same fine motor control circuits as we (and our primate cousins) use to coordinate our hands and fingers.

This means that we use words almost as if they are tools in our hands. At the neurological level, words are gestures to which we give meaning, and then use as tools to share that meaning.

Why did our ancestors need words?

Hearing words brings our ideas to mind, coordinating the thoughts of our social group. New words are coined when that group agrees to associate a syllable sequence (a word), itself distinct from existing words, with a new unique meaning. Some other animals, e.g. dogs, can learn to associate human word sounds or gestures with simple meanings.

Dogs (Canis familiaris) have lived alongside humans for over 33,000 years. We have selectively bred these animals to shepherd, hunt, sniff out and retrieve our targets for us upon demand. Human-directed selection has ‘evolved’ working dogs that can be trained to recognise around 200 human words. Commands, or proxies for them such as whistled signals, are tools used to coordinate their activity with ours (Image: Wikimedia Commons)

However, our understanding and use of words – as symbolic tools – is highly flexible. Our application of words is often playful; for example, puns and ambiguities can extend the meaning of a word, or apply it in a new way. This furthers our use of these tools for social interaction.

Perhaps starting around 2.5 Ma, our ancestors began to experience selective forces that ultimately promoted a remarkable mental flexibility, resulting in the development of elaborate and multi-purpose manual tools. This expanded tool use corresponds with the onset of cultural learning. The making and development of tools is learned from our social group, as is our speech.

Are we then unique in our ability to coin new words? Dolphins and some other whales broadcast ‘signature calls’ when hunting in murky and deep water, enabling them to stay connected with their pod. Vocal self-identifying calls would have provided similar benefits to our hominin ancestors in dense, low visibility forest habitats, and perhaps also across large distances in open grassland habitats. Specific word tools for sharing information, e.g. warning of snakes or poisonous fruit, would enable this group to collectively navigate their world more effectively than they could alone.

We often talk whilst using our hands to make additional gestures, or operate tools. Here those tools are knives, forks and wineglasses (Image: Wikimedia Commons)

As with manual tools, the act of using words provides immediate feedback. Our language may have a gestural basis in the brain, but our vocal-auditory speech mode is much more efficient. Although we often move our hands when we talk, we can speak whilst conducting other manual tasks.

How did our ancestors begin to use words as tools?

– Peter MacNeilage suggests that our language arose directly as vocal speech. Our ancestors’ circumstances may have selected for specific vocal signals, received using their auditory communication channel, whilst their hands were busy with other tasks. This could include hunting with manual tools, foraging or attending to their young.

– William Stokoe and others argue instead that sign came first. Hand gestures use the visual channel as a receiver. They suggest that vocal gestures emerged later, perhaps as a combined visual and auditory signal.

This photograph of the New York Curb Association market (c. 1916) shows brokers and clients signalling from street to offices using stylised gestures. Similar manual signs have arisen in many open floor stock exchanges around the world, where they made it possible to broker rapid ‘face-to-face’ deals across a noisy room (known as the ‘open outcry’ method of trading). Today these manual languages have been largely superseded by the advent of telephones and electronic trading through the 1980s and 1990s (Image: Wikimedia Commons)

In practice, we often use manual and vocal channels synchronously, but they don’t mix; we never create words that oblige us to combine hand movements with mouth sounds. Sign languages based on gestures do arise ‘naturally’ (i.e. much like a pidgin language), usually in response to a constraint, such as where deafness is present in some or all of the population, or where other forms of common language are not available within the group. Manual languages arising under such circumstances reveal just how flexible and adaptable our speech function really is.

Before our ancestors could assign meaning to words, however, they had to learn how to copy and reproduce the unique movements of the lips and tongue that each new word requires.

What might those first words have been?

Babbling sounds are learned. Hearing-impaired infants start to babble later, are less rhythmical and use more nasal sounds than babies with normal hearing. Children exposed only to manual sign language also ‘babble’ with their hands. Language learning robots that hear and interact with adult humans quickly pick out relevant one-syllable words from a stream of randomly generated babble. These initial syllables act as ‘anchors’, allowing the machines to more quickly distinguish new syllables from the human sounds they hear (Image: Wikimedia Commons)

Babies begin to control the rhythmical movements involved with both eating and vocalising as they start to babble, at around 4-6 months. Making these movements involves coordinating the rhythmical nerve outputs of multiple Central Pattern Generator neural circuits.

Central Pattern Generators operate various repetitive functions of the body, including breathing, walking, the rhythmic arm movements that babies often make as they babble, and the baby hand-to-mouth grab reflex.

Babies begin to babble simply by moving their lower jaw at the same time as making noises with the larynx. These sounds are actually explorations of syllables the child has already heard; around half are the simple syllables used in ‘baby talk’.

Learning to make these sounds involves mastering the simplest of our repetitive vocal movements; typically this involves opening and closing the jaw with the tongue in one position (front, central or back) inside the mouth.

To suckle, babies raise the soft palate in the roof of their mouths to close off the nasal cavity. This creates a vacuum in the mouth that enables them to obtain milk from the breast. After swallowing, the infant opens the soft palate to take a breath through the nose; this often results in an ‘mmm’ sound in the nasal cavity (Image: Wikimedia Commons)

Say ‘Mmmmm’, then ‘ma-ma’… Where do you feel this sound resonating? A suckling child’s murmuring sounds have this same nasal resonance.

Our first vocal sounds as babies show our desire to connect with our parents. This connection is two-way; neural and hormonal responses are triggered in human parents upon hearing the cries of their child.

A baby makes nasal murmuring sounds when its lips are pressed to the breast and its mouth is full. Perhaps as a mother repeats these soothing sounds back to her child, they become a signal that the infant associates with its mother and later mimics to call to her. Selection may have favoured hominins able to connect with their offspring using vocal sounds.

Unlike young chimps who cling to their mothers, human babies need to be carried. A hominin mother soothing her child by voice sounds would be able to put down her baby and forage with both hands.

There is more. Consider walking. Adopting an upright posture provoked a re-structuring of our hominin ancestors’ body plan.

Breathing and swallowing whilst standing up required a re-orientation of the larynx. This organ acts as a valve controlling the out-breath, prevents food entering the trachea, and houses the vocal folds (vocal cords) controlling the pitch and volume of the voice.

The nasal resonant consonants of ‘mama’ are made with the tongue at rest and soft palate open (above). In this position the nasal cavity is continuous with the mouth and throat. To produce ‘dada’, the soft palate elevates (below), closing off the nasal cavity and limiting resonance to the oral chamber (Image: Modified from Wikimedia Commons)

Breathing and eating whilst standing upright also requires that the soft palate (velum) in the roof of the mouth can move up and down, closing off the nasal cavity from the throat when swallowing. Moving the soft palate also changes the size and connection between the resonating chambers in our heads.

‘Ma-ma’ sounds are made with the soft palate in an open position and opening and closing the jaw to articulate the lips together. Closing the soft palate shifts resonance into the mouth, producing ‘pa-pa’ from the same movement.

Most world languages have nasal and oral resonance in their ‘baby talk’ names for ‘mother’ and ‘father’. Peter MacNeilage highlights this as the only case of two contrasting phonetic forms being regularly linked with their opposing meanings. The desire of hominin infants to connect specifically with one or the other parent may have resulted in them producing the first deliberately contrasted sounds.

Could these sounds, perhaps along with sung tones, have been part of our first ‘words’?

How do we apply meanings to these vocal gestures?

Chimpanzees make spontaneous vocal sounds that express emotions such as fear and pleasure, much as we do. They also communicate intentionally, using non-verbal facial gestures e.g. lipsmacks.

We gesture with our faces, hands and voices across all languages and cultures. Human baby babbling is also voluntary, combining sound from the larynx with lipsmack-like movements to create simple syllables.

Mandarin Chinese is a tonal language; words with different meanings are coded into the same syllable using changes in pitch. The four main tones of standard Mandarin can all be pronounced with the syllable “ma” (Image: Wikimedia Commons)

The initiation of vocal sounds arises from different regions of the brain in humans and other primates. Primate calls arise from emotional centres within the brain (associated with the limbic system), whereas human speech circuits are focussed around the lateral sulcus (Sylvian fissure).

Within the lateral sulcus, a zone of the macaque brain (area ‘F5’), thought to be the equivalent of Broca’s area in humans, houses nerve pathways called ‘mirror neurons’. The mirror circuits are involved with producing and understanding grasping actions associated with obtaining food, decoding others’ facial expressions, and making mouth movements related to eating.

These circuits reveal that neurological routes link hand-and-mouth action commands. Broca’s area in humans is essential for speech, hand gestures and producing fine movements in the fingers.

A female blackbird (Turdus merula) with nest building materials. As well as speaking and manipulating food in a precise way, many animals and birds use their mouths as a tool to manipulate objects. The materials with wh... moreich this blackbird builds her nest are also tools. Using the eating apparatus to perform other tasks is common amongst vertebrates (Image: Wikimedia Commons)

This and other higher brain areas control Central Pattern Generator circuits in the lower brain which coordinate eating movements and voice control. The same circuits that operate grasping gestures with the hands also trigger the mouth to open and close in humans and other higher primates (the automatic hand-to-mouth reflex of babies).

Mirror neuron networks in humans interconnect with the insula and amygdala; components of the limbic system that are involved in emotional responses. Maurizio Gentilucci and colleagues at the University of Parma suggest that mirror neurons which link these components of the emotional brain with higher brain circuits for understanding the intention of food grasping gestures may have enabled our ancestors to associate hand or mouth gestures with an emotional content. Tagging our observations with an emotional response is how we code our own vocal and other gestures with meaning.

Pronunciation chart for Toki Pona, a simple constructed language, designed by Toronto-based linguist Sonja Lang. Toki Pona has 14 main sounds (phonemes) and around 120 root words, and was designed as a means to express maximal meaning with minimal complexity. Each human language uses a unique selection of sounds from the syllables which are possible to make using our vocal apparatus. As our children learn their first words, they replicate the spoken sounds they hear. In this way, the sounds we learn as part of our first languages are specific to our location and circumstances (our environment), and reproduce local nuances in pronunciation. As we become fluent in our native language, producing these sounds becomes ‘automatic’. We are rarely conscious of the syllables we choose, focussing instead on what we want to say. People learning a foreign language as adults tend to speak that language using the sound repertoire of their native tongue. To listen to the same panphonic phrase repeated in a variety of accents (and to contribute your own if you wish), visit the Speech Accent Archive (Image: Wikimedia Commons)

Many primates vocalise upon discovering food. Gestures then may be a bridge linking body movement to objects and associated vocal sounds. Hearing but not seeing an event take place allows the hearer to visually construct an idea of the associated experience in their mind. Once an uttered sound could trigger an associated memory, our hominin ancestors could then revisit that experience.

When we hear or think of words that describe an object or a movement, the same mirror neuron circuits are activated as when we encounter that object or make the movement. Thinking of the words for walking or dancing also triggers responses in our mirror neuron network that are involved with walking or dancing movements. When we think of doing something, and then do it, we are literally ‘walking our talk’.

Conclusions

- Words are tools produced by unique sets of movements in the vocal apparatus. They may have developed in our hominin ancestors as a sound-based form of gesture.

Young chimpanzees from the Jane Goodall sanctuary of Tchimpounga (Congo Brazzaville). Wild chimpanzees (Pan troglodytes) make ‘pant hoot’ calls upon finding food, such as a tree laden with fruit. These calls are recognisable by other members of their group. Adjacent groups of wild chimps with overlapping territories adjust and re-model their pant hoot calls so that their group call signature is distinctive from that of the other tribe. These remodelled calls seem to indicate group learning amongst these animals (Image: Wikimedia Commons)

- Studying how our babies learn to speak gives us some insights into how hominins may have made the transition to talking. Our ancestors’ first word tools may have been parental summoning calls. The vocal calls of babies assist them to bond strongly with their parents.

- Words inside the brain replicate our physical experience of the phenomena that they symbolise.

- The mental flexibility to agree new sound combinations and associate these with meaning provided our hominin ancestors with a powerful resource of vocal tools that allow us to share our learning. This ability to share learning has many potentially selectable survival advantages.

Text copyright © 2015 Mags Leighton. All rights reserved.

References

Davis, B.L. and MacNeilage, P.F. (1995) The articulatory basis of babbling. Journal of Speech and Hearing Research 38, 1199-1211.

Eisen, A. et al. (2013) Tools and talk: an evolutionary perspective on the functional deficits associated with amyotrophic lateral sclerosis. Muscle and Nerve 49, 469-477.

Falk, D. (2004) Prelinguistic evolution in early hominins: whence motherese? Behavioural and Brain Sciences 27, 491-541.

Gentilucci, M. and Dalla Volta, R. (2008) Spoken language and arm gestures are controlled by the same motor control system. Quarterly Journal of Experimental Psychology 61, 944-957.

Gentilucci, M. et al. (2008) When the hands speak. Journal of Physiology-Paris 102, 21-30.

Goldman, H.I. (2001) Parental reports of “mama” sounds in infants: an exploratory study. Journal of Child Language 28, 497-506.

Jakobson, R. (1960) Why “Mama” and “Papa”? In Essays in Honor of Heinz Werner (R. Jakobson, ed.) pp. 538-545. Mouton.

Johnson-Frey, S.H. (2003) What's so special about human tool use? Neuron 39, 201-204.

Johnson-Frey, S.H. (2004) The neural bases of complex tool use in humans. Trends in Cognitive Sciences 8, 71-78.

Jürgens, U. (2002) Neural pathways underlying vocal control. Neuroscience and Biobehavioural Reviews 26, 235–258.

King, S.L. and Janik, V.M. (2013) Bottlenose dolphins can use learned vocal labels to address each other. Proceedings of the National Academy of Sciences, USA 110, 13216-13221.

King, S. et al. (2013) Vocal copying of individually distinctive signature whistles in bottlenose dolphins. Proceedings of the Royal Society of London, B 280, 20130053.

Lieberman, P. (2006) Toward an Evolutionary Biology of Language. Harvard University Press.

Lyon, C. et al. (2012) Interactive language learning by robots: the transition from babbling to word forms. PLoS ONE 7, e38236.

MacNeilage, P. (2008) The Origin of Speech. Oxford University Press.

MacNeilage, P.F. and Davis, B.L. (2000) On the origin of internal structure of word forms. Science 288, 527-531.

MacNeilage, P.F. et al. (2000) The motor core of speech: a comparison of serial organization patterns in infants and languages. Child Development 71, 153–163.

MacNeilage, P.F. et al. (1999) Origin of serial output complexity in speech. Psychological Science 10, 459-460.

Matyear, C.L. et al. (1998) Nasalization of vowels in nasal environments in babbling: evidence for frame dominance. Phonetica 55, 1-17.

Mitani, J.C. et al. (1992) Dialects in wild chimpanzees? American Journal of Primatology 27, 233-243.

Petitto, L.A. and Marentette, P. (1991) Babbling in the manual mode: evidence for the ontogeny of language. Science 251, 1493-1496.

Savage-Rumbaugh, E.S. (1993) Language comprehension in ape and child. Monographs of the Society for Research in Child Development 58, 1-222.

Stokoe, W.C. (2001) Language in hand: Why sign came before speech. Gallaudet University Press.

Tomasello, M. (1999) The Human Adaptation for Culture. Annual Review of Anthropology 28, 509-529.

Gut feelings; are microbes in your intestines dictating your mood?

A cat hears a scuffling sound amongst the garbage. She stands in shadow, all senses poised.

A few moments pass; the rat emerges. In this alleyway, a light breeze carries her scent towards him. He sniffs the air, and looks in her direction. Two eyes blink, and then fixate upon him.

But he does not heed the warnings. A parasite in his brain, picked up from cat faeces, has immobilised his fear response. He scuttles out across the floor…

We are mostly bacteria. The microbes living on our skin, on the linings of our lungs and throughout our gut are often estimated to outnumber our body cells ten to one. In fact, bacteria and other micro-organisms live in and around all multicellular life forms, from plants to people. This ‘microbiome’ is an essential part of our biology and our health, and even influences how we (and all other animals) behave.

Microbes begin to colonise our gut from the moment of our birth. They help to pre-digest our food, making nutrients soluble and hence available for absorption through the gut wall. But the helpfulness of these bacteria doesn’t stop here.

The many small fatty acids and amino acids produced by bacterial fermentation act as signals inside our bodies. They initiate the development of the network of nerves in the gut wall; our ‘enteric nervous system’ (also known as our ‘second brain’). These nerves control the rhythmical contractions of the digestive canal, which operate independently of the central nervous system. Bacterial products also initiate, nourish and maintain the cells lining our gut.

A thin section of the small intestine wall, stained for CK20 protein (found in the mucosal lining). The lining of our intestines is a vast sensory surface, whose cells are nourished by fatty acids, vitamins and other compounds produced by bacterial fermentation. The finger-like projections (called villi) visible in this section form our main absorptive surface for nutrients, and sense the presence of both friendly bacteria and harmful pathogens (Image: Wikimedia Commons)

However, the role of this microbial community goes beyond digestion.

– They out-compete harmful bacteria, ‘policing’ the gut and maintaining its pH.

– They present antigen signals (bacterial surface proteins) to our immune system, training us to recognise ‘friend’ from ‘foe’.

– They produce neurotransmitters and hormones, which are used directly and indirectly by the body. These include the cytokines and chemokines needed by immune cells to induce inflammation and fever responses and to target white blood cells into infected tissues. Bacteria produce precursors for 95% of our body’s serotonin (the ‘feel good’ brain chemical), and 50% of our dopamine.

Bacterial products affect the formation of connections between neurons (the synapses).

The enteric nervous system interacts with the central nervous system via the vagus nerve (the parasympathetic system) and the prevertebral ganglia (sympathetic nervous system). However, if these nerve connections are severed, the enteric system will continue to function, integrating and resolving signals from the body and the environment. This network uses around 30 neurotransmitters, most of which are also found in the brain, and which include acetylcholine, dopamine and serotonin (Image: Wikimedia Commons)

Our ‘gut-brain axis’ is linked indirectly by chemicals the bacteria produce, which activate the immune system. Our mind and gut are also linked directly via the vagus nerve.

The vagus or ‘wandering’ nerve, our 10th cranial nerve, forms part of the parasympathetic (involuntary) nervous system. It is a ‘mixed nerve’, meaning that it both carries sensory information about our body state back to the brain (including from the gut to the brainstem) and relays messages from the brainstem and emotional centres to the body.

This information highway operates the ‘vagal reflex’, which relaxes the muscles around the stomach wall making space for our food, and also integrates the digestive process with our blood circulation, hormone system and emotional state.

This conversation between the digestive, immune, hormonal and nervous systems is essential for our healthy development. Mice raised under sterile conditions (so that their guts are free of bacteria) develop fewer connections between neurons, which results in retarded brain growth.

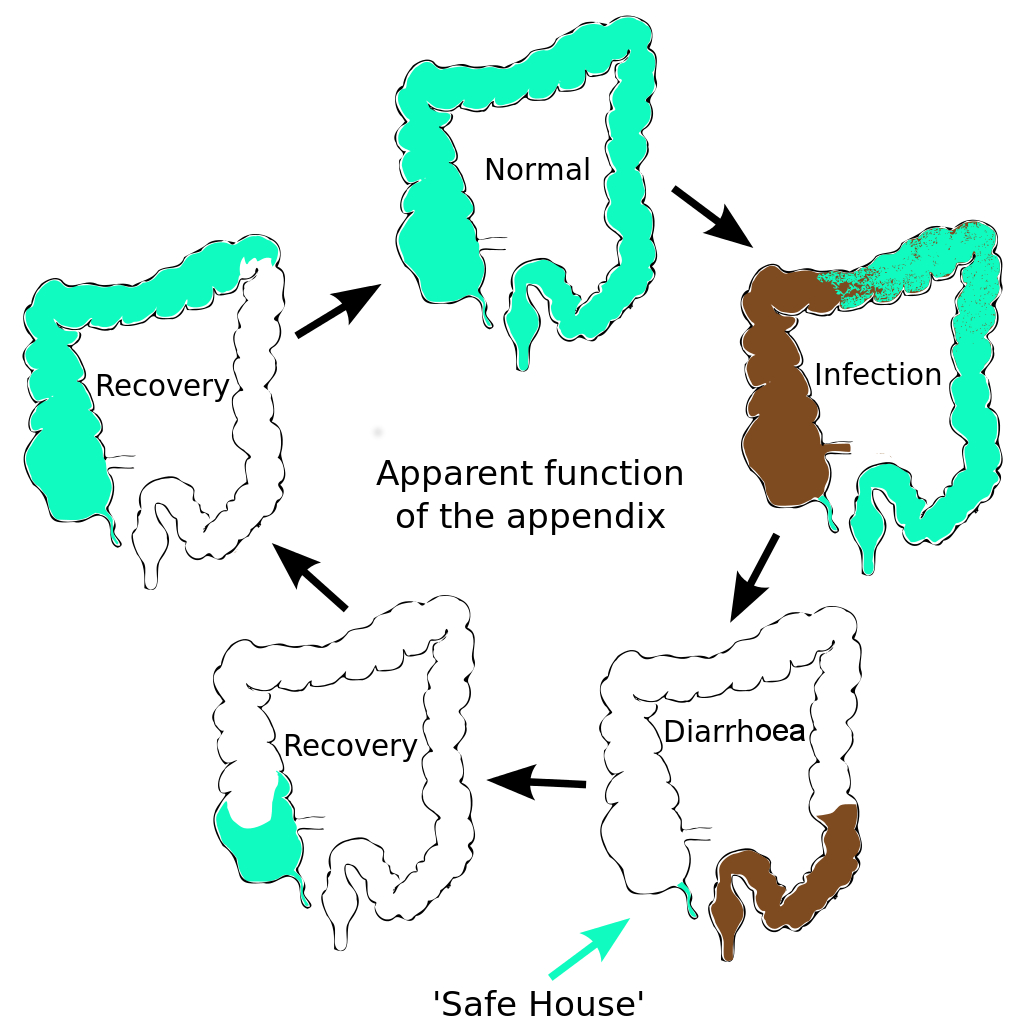

The human appendix is surrounded by copious amounts of immune tissue which particularly nurture and protect this sub-sample of our gut bacterial community. This creates a ‘safe house’ for a sample of our gut microflora. After an infection has triggered a diarrhoea response which expels the intestinal contents, the guts are recolonized by bacteria from this reservoir in the appendix. Charles Darwin first suggested that the human appendix is a relic of our evolution; a ‘vestige’ of a much larger mammalian caecum. (A caecum is a larger area of gut, containing bacteria, adapted in herbivores to digest large amounts of plant material). However, this explanation doesn’t fit the facts. The appendix has evolved at least twice, arising independently in marsupials and placental mammals; an example of evolutionary convergence. This suggests that it has a current and selectable function (Image: Amended from Wikimedia Commons)

Gut microbes also influence our mood and emotions, affecting our behaviour. If intestinal bacteria from timid mice are used to populate the guts of a normally inquisitive mouse strain, these more adventurous mice become timid. Likewise, timid mice become adventurous when given the microflora from inquisitive mice.

Behavioural effects are also visible in humans. One of the first studies, conducted in France by Michaël Messaoudi and co-workers, found that drinking milk fermented with probiotic bacteria (versus non-fermented milk) lifted the mood of healthy human volunteers, reduced their blood cortisol levels (a hormone which increases during stress) and gave them a greater resilience against stimuli provoking symptoms of depression and anxiety.

Just as gut bacteria influence our health and mood, experiences that change our mood and behaviour in turn affect the composition of this microbial community. This shows that our gut microbiota are not autonomous, but act like a fully integrated body organ. This microbiotic organ monitors and responds to the food we eat, influences how we respond to our world, and connects with our body using messages sent in a common chemical ‘language’. We speak about our ‘gut feelings’, usually unaware that this is much more than a metaphor.

It seems then, that our thoughts, feelings and behavioural choices affect our gut microbiome. As we ‘tell them’ how we feel (through the parasympathetic nervous system, immune system and hormones), they align their metabolic responses with this information. Their biochemical cues reinforce the body’s signal by releasing neurologically active chemicals that affect our mood. If our enteric nervous system really does deserve its title of the ‘second brain’, then the bacteria in our gut are mediating a connection that integrates the ‘thoughts of our guts’ with those of our mind.

Lab mouse, strain mg 3204. Laboratory mice are usually derived from the house mouse (Mus musculus). Mice are useful as a system to study many aspects of human health, thanks to having a high similarity with our genetic code, as well as the ability to thrive in a human-influenced environment. As with humans, many aspects of their development and behaviour are dependent upon a healthy community of gut microflora (Image: Wikimedia Commons)

Since we and all other animals are intimately associated with our gut bacterial ‘organ’, this raises interesting questions at the cellular level about where our physical boundaries really are.

Text copyright © 2015 Mags Leighton. All rights reserved.

References

Collins, S.M. and Bercik, P. (2013) Intestinal bacteria influence brain activity in healthy humans. Nature Reviews Gastroenterology & Hepatology 10, 326-327.

Cryan, J.F. and Dinan, T.G. (2012) Mind-altering micro-organisms; the impact of the gut microbiota on brain and behaviour. Nature Reviews Neuroscience 13, 701-712.

David, L.A. et al. (2014) Diet rapidly and reproducibly alters the gut microbiome. Nature 505, 559-566.

Diamond, B. et al. (2011) It takes guts to grow a brain. Bioessays 33, 588-591.

Faith, J.J. et al. (2011) Predicting a human gut microbiota's response to diet in gnotobiotic mice. Science 333, 101-104.

Forsythe, P. et al. (2010) Mood and gut feelings. Brain, Behaviour and Immunity 24, 9-16.

Frank, D.N. and Pace, N.R. (2008) Gastrointestinal microbiology enters the metagenomics era. Current Opinion in Gastroenterology 24, 4-10.

Heijtz, R.D. et al. (2011) Normal gut microbiota modulates brain development and behaviour. Proceedings of the National Academy of Sciences, USA 108, 3047–3052.

Jahan-Mihan, A. (2011) Dietary proteins as determinants of metabolic and physiologic functions of the gastrointestinal tract. Nutrients 3, 574-603.

Kurokawa, K. et al. (2007) Comparative metagenomics reveals commonly enriched gene sets in human gut microbiomes. DNA Research 14, 169-181.

Lee, W.J. and Brey, P.T. (2013) How microbes influence metazoan development: insights from history and Drosophila modelling of gut-microbe interactions. Annual Review of Cell and Developmental Biology 29, 571-592.

Lee, W.J. and Hase, K. (2014) Gut microbiota-generated metabolites in animal health and disease. Nature Chemical Biology 10, 416-424.

Iyer, L.M. et al. (2004) Evolution of cell-cell signalling in animals: did late horizontal gene transfer from bacteria have a role? Trends in Genetics 20, 292-299.

Messaoudi, M. et al. (2011) Assessment of psychotropic-like properties of a probiotic formulation (Lactobacillus helveticus R0052 and Bifidobacterium longum R0175) in rats and human subjects. British Journal of Nutrition 105, 755-764.

Montiel-Castro, A.J. et al. (2013) The microbiota-gut-brain axis: neurobehavioural correlates, health and sociality. Frontiers in Integrative Neuroscience 7, article 70.

O’Hara, A.M. and Shanahan, F. (2006) The gut flora as a forgotten organ. EMBO Reports 7, 688-693.

Bollinger, R.R. et al. (2007) Biofilms in the large bowel suggest an apparent function of the human vermiform appendix. Journal of Theoretical Biology 249, 826-831.

What’s so different about human speech?

Upon leaving the island, Odysseus is warned that storms lie ahead. His route home to Ithaca passes the sirens; monsters whose beautiful, haunting voices lure sailors to their deaths.

He sets a course and explains his intention to the crew. At his command they fasten him to the mast, seal their ears with wax, and prepare for their encounter.

They reach treacherous waters. The siren song reaches into Odysseus’ mind, resonating with his deepest longings. The storm rages within. He struggles, but his bindings, the result of his clear intention, secure him tightly to the mast.

Forced to stand still and listen, he finds that he starts to hear the voices for what they really are; the empty fears of his own soul. He relinquishes his fight and hears the voice at his own still centre. The storm calms.

The crew notice that he has returned to his senses. They cut him loose.

He is indeed a wise and worthy captain.

This ancient footprint, first made in soft mud, is an index which shows us the passing of a three-toed theropod dinosaur. Denver, Colorado (Image: Wikimedia Commons)

Speaking involves transmitting and interpreting intentional signs, some of which are also used in the instinctual communications of animals. These signals are of three kinds:

1. An ‘index’ physically shows the presence of something, e.g. the scent that wolves follow when tracking their prey.

2. An ‘icon’ resembles the thing it stands for, like a photograph or a painting. Dolphins, apes and elephants recognise their own reflection; we assume that they interpret this two-dimensional image as representing their three-dimensional physical selves.

3. A symbol associates an unrelated form with a meaning. Our words are symbols, linking an idea with unique sound-and-movement sequences. They do not resemble the things they represent.

The ‘Union Jack’, a symbol of Great Britain since the union of Great Britain and Ireland in 1801. It is made up of three other flag symbols; the Cross of St George for England (inset, top), St Andrew’s Saltire for Scotland (centre), and for Northern Ireland, St Patrick’s Saltire (below) (Image: Wikimedia Commons)

Symbolism is almost unknown amongst animals, with a few rare exceptions. A stereotypical form of symbol is the ‘waggle dance’ of honeybees.

Although chimpanzees can be taught to use some sign gestures, they do not naturally communicate using symbols. In contrast, we use our symbolic language intentionally.

Our uses of speech are unique. We revisit our memories, order our thoughts and make future plans. With a destination in mind, we can listen for our ‘inner voice’, map out our route, take a stand against the storm of inner and outer distractions, and find our way home.

How is our speech unique?

Normal speech is already multi-channel; our words are accompanied by the musicality of our speaking, and our facial expressions and other physical gestures transmit many layers and levels of complex meaning. Writing is another mode of communicating our language. Social media transmits our language into virtual worlds. The online social networking service Facebook commissioned ‘Facebook Man’ to commemorate their 150 millionth user (Image: Wikimedia Commons)

Aspects of our language ability are found in other animals, but the way we have combined and developed these traits is uniquely human.

1. We use any available channel.

Most human languages use vocal speech. Under circumstances where speaking is not possible, we find other ways, e.g. sign languages and Morse code.

2. We build our words from parts that gain meaning as they are combined

Most of the syllables we use to build words lack meaning on their own. Combining them together (as in English) or adding tonal shifts (as in Chinese) creates words.

3. We code our words with meanings, making them into symbols

The chimpanzee (Pan troglodytes) known as Washoe (1965-2007) was the first non-human animal to be taught American Sign Language. She lived from birth with a human family, and was taught around 350 sign words. It was reported that upon seeing a swan, Washoe signed “water” and “bird”. Chimpanzees are capable of learning simple symbols. However, Washoe did not make the transition to combining these symbols together into new meanings (Image: Wikimedia Commons)

Symbols are ‘displaced’, i.e. they do not need to resemble the thing they represent. Our words symbolise ideas, experiences and things.

4. We combine these symbols to make new meanings.

We build words into phrases and stories, use these to revisit and share our memories, combine them into new forms, and communicate this information to others in various ways. Combining different symbols brings us a new understanding, which changes how we respond.

Look at this painting. As you do, consider what feelings it provokes.

‘Wheatfield with crows’ by Vincent Van Gogh, 1890 (Image: Wikimedia Commons)

It is, of course, by Vincent Van Gogh. As you may know, his choices of colour and subject material were a personal symbolic code. He often used vibrant yellows, considering this colour to represent happiness.

His doctor noted that during his many attacks of epilepsy, anxiety and depression, Van Gogh tried to poison himself by swallowing paint and other substances.

As a consequence, he may have ingested significant amounts of toxic ‘chrome yellow’, which contains lead(II) chromate (PbCrO4).

Now consider this statement.

“This is the last picture that Van Gogh painted before he killed himself” (John Berger 1972, p28)

Look again at the picture.

What do you feel this time?

‘Wheatfield with crows’ by Vincent Van Gogh, 1890 (Image: Wikimedia Commons)

Certainly our response has changed, though it is difficult to articulate precisely what is different. The image now seems to illustrate this sentence. Its symbolic content has altered for us. This example shows how combining two types of information (an image and text) can change the meaning that each symbolises.

Some animals can be trained to recognise simple symbols. The psychologist Irene Pepperberg taught her African Grey parrot ‘Alex’ to count; he learned to use numbers as symbols, and could identify quantities of up to 6 items.

An African Grey Parrot (Psittacus erithacus). Professor Irene Pepperberg’s parrot, Alex, learned basic grammar, could identify objects by name, and could count (Image: Wikimedia Commons)

5. The order in which we combine symbols defines their meaning

We put word symbols together into phrases, sentences, descriptions, sayings, stories, poems, documents, manuals, plays, oaths, promises, parodies, pantomimes…

The ordering of words follows rules (grammar and syntax). Animals such as dogs and dolphins show some form of syntactical ability, but there is no evidence that they are on the verge of using what we understand as language. The order of words shows us their relationship, allowing us to understand how they are interacting. We change the order of our words and phrases to change the meaning we wish to communicate.

For instance, this makes sense.

‘Jane asked Simon to give these flowers to you.’

This doesn’t quite fit our normal understanding of reality…

‘These flowers asked Simon to give Jane to you.’

This works, but the meaning has changed.

‘Simon asked you to give these flowers to Jane.’

However, grammar is not enough. The words in combination need to ‘make sense’ for us to understand the meaning the speaker wishes to communicate.

What does this enable us to say?

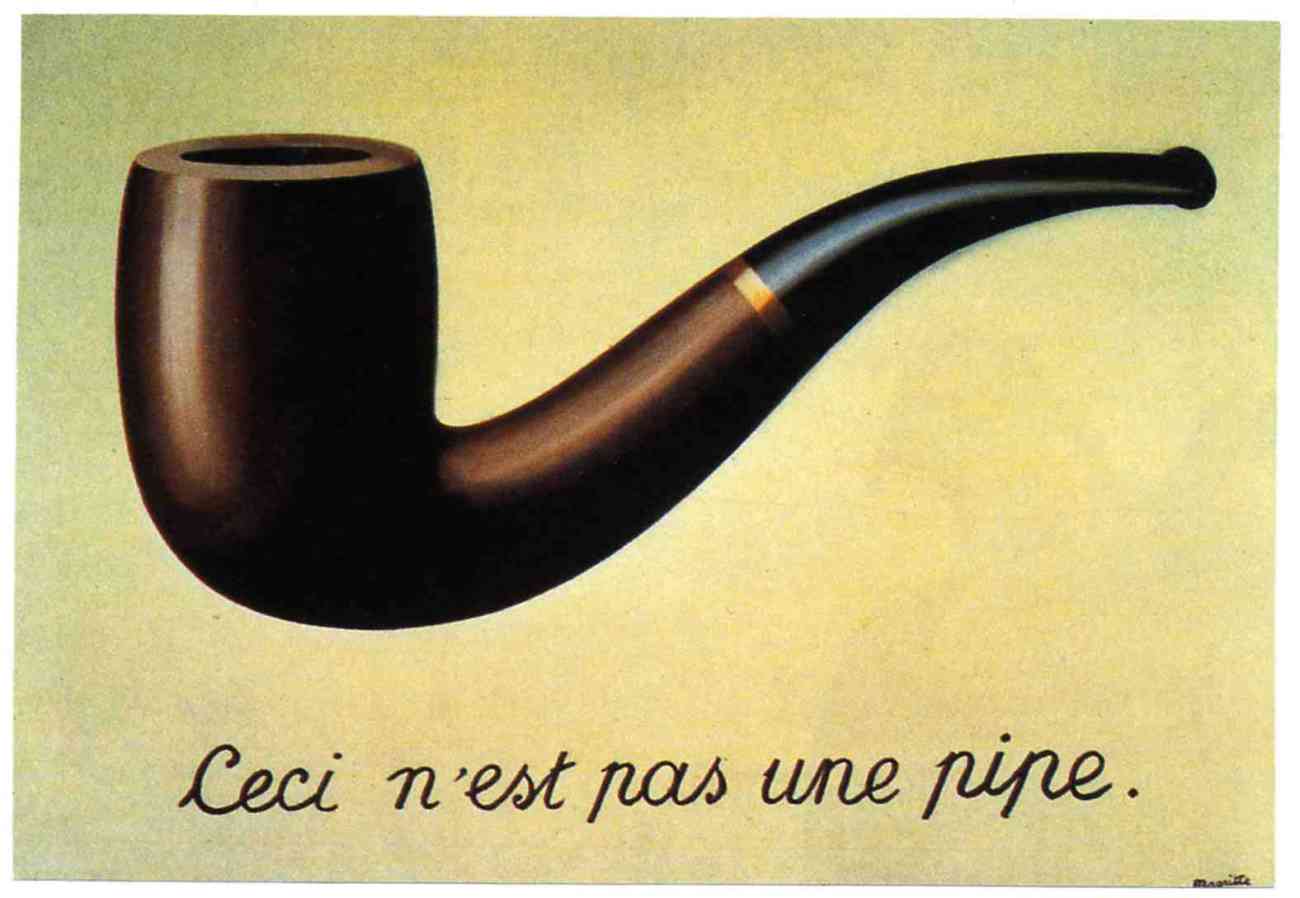

‘The treachery of images’ by Belgian surrealist painter René Magritte (1928-9). Much of Magritte’s work explored the combination of words and images, and the way that this challenges the meaning that we understand from the components on their own. This combination of words and image has been deliberately chosen so that they contradict each other. What the artist says is true, however: it isn’t a pipe! It is a two-dimensional representation of a pipe (Image: Wikimedia Commons)

When we make new combinations of words, or add words to a visual signal such as a gesture, we create a new meaning.

We can add adjectives to a description, add qualifiers, combine phrases into a sentence, and make statements one after the other so that our listener associates these ideas. This process is known as ‘recursion’, a linguistic term borrowed from mathematics.

Our ideas about time vary between cultures, but we all mentally ‘time travel’ by revisiting our memories. For instance, the scent of something can evoke a memory that transports us back into an earlier event; suddenly we experience again the emotions and sensations we felt at that time. Putting our current selves into the past memory, or imagining a future scenario and inserting ourselves into that story, is a form of recursion.

Memory allows us to link speaking and listening with the meanings of our words. Our language is well structured to easily express recursive ideas. This shows us that our thinking uses recursion.
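The way a phrase can contain a smaller phrase of the same kind can be sketched in a few lines of Python. This is only an illustration of recursion in the mathematical sense, using a cumulative sentence in the style of ‘The House that Jack Built’; the function name `cumulative_tale` and the list of clauses are assumptions made for the example, and nothing here is a model of how the brain builds sentences.

```python
def cumulative_tale(clauses):
    """Join clauses into one sentence by embedding each phrase in the next.

    The function calls itself on the remaining clauses, so every phrase
    contains a smaller phrase built in exactly the same way.
    """
    if len(clauses) == 1:
        # Base case: the innermost clause ends the sentence.
        return clauses[0]
    head, rest = clauses[0], clauses[1:]
    return head + " that " + cumulative_tale(rest)


# Hypothetical clause list, shortened from the nursery rhyme.
clauses = [
    "the cat",
    "killed the rat",
    "ate the malt",
    "lay in the house",
    "Jack built",
]

print("This is " + cumulative_tale(clauses) + ".")
```

Adding one more clause to the front of the list lengthens the sentence without changing the function, which is the sense in which a recursive rule lets a short description generate sentences of any length.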

Why are we able to do this?

An illustration by Randolph Caldecott (1887) for ‘The House that Jack Built’. This traditional British nursery rhyme uses recursion to build up a cumulative tale. The sentence is expanded by adding to one end (end recursion). Each addition adds an increasingly emphatic meaning to the final item of the sentence (i.e. the house that Jack built) (Image: Wikimedia Commons). One final version of combined phrases ends like this:

This is the horse and the hound and the horn

That belonged to the farmer sowing his corn

That kept the cock that crowed in the morn

That woke the priest all shaven and shorn

That married the man all tattered and torn

That kissed the maiden all forlorn

That milked the cow with the crumpled horn

That tossed the dog that worried the cat

That killed the rat that ate the malt

That lay in the house that Jack built.

Our thinking capacity, through which we learn and remember, means that we can copy and learn to use language. Although some brain regions appear specialised for roles in memory and language, our ‘language function’ uses our entire brain, and cannot be dissociated from our minds.

Our ‘language brain’ includes the ‘basal ganglia’; these are neurons which connect the outer cortex and thalamus with lower brain regions.

We need this connectedness to coordinate movements in our fingers, to understand the relationships between words that are inferred by their order in our phrases, and to solve abstracted (theoretical) problems. This network interacts with ‘mirror neurons’ which allow us to relate to and decode the posture, speech and emotional cues of others.

Philip Lieberman’s work with people suffering from Parkinson’s disease suggests that it is the ability to remember that makes speaking possible. Parkinson’s patients have degraded nerve circuits in their basal ganglia, so these patients have short-term memory problems and difficulties with balancing and making precise finger movements. They also struggle with understanding and using metaphors and longer word sequences. This suggests that when we speak we are using the circuitry for sorting and remembering movement sequences, irrespective of whether these are producing words or actions.

Our posture has remodelled the evolution of our entire physiology from breathing to childbirth. It frees the hands, allowing us to perform delicate and precise sequences of tasks. Selection for the ability to precisely sequence our manual motor skills may have provided our ancestors the means to better sequence their thoughts (Image: Wikimedia Commons)

The basal ganglia that influence our speech also regulate the muscles controlling our posture. Standing is therefore more than just balancing on two legs; it is a whole body activity and requires much finer muscle control than walking on all fours. It also frees the hands, which allows us to manipulate tools.

Lieberman suggests that it is the fine motor control required to maintain our upright posture which pre-adapted our ancestors for manipulating hand tools as well as the tongue, lips and other structures that make speech possible. This upright posture is linked with a remodelling of our breathing apparatus, giving us more control over our larynx.

The nerve networks that control our limbs and voices are linked across all vertebrates. Our basic ‘walking instinct’ initially activates Central Pattern Generator circuits driving movement in all four limbs. These are the same neural outputs that control our lips, tongue and throat.

Conclusions: What does this say about our language?

Captain Odysseus stands upright against the mast. This posture is distinct to our species, and has many implications for our speech, language and other actions (Image: Wikimedia Commons)

- Our hominin ancestors evolved to use symbolic words and stories as a code to store and share memories, develop new skills and ideas, and coordinate their intentions and actions with their tribe.

- When we revisit our memories or ‘reword’ our experiences into new sequences, we remodel the past, and project our thoughts into the future.

- The control we have over our vocal sounds is linked with our neural circuits for movement. The ability to balance ideas and manipulate our tongues is linked to our ability to stand upright, balance on two feet and manipulate tools with our hands.

- Language, then, is a cultural tool that allows us to order our thoughts, go beyond our instincts, share our intentions, and choose our own story.

Text copyright © 2015 Mags Leighton. All rights reserved.

References

Berger J (1972) ‘Ways of Seeing’ Penguin books Ltd, London, UK

Bickerton D and Szathmáry E (2011) ‘Confrontational scavenging as a possible source for language and cooperation’ BMC Evolutionary Biology 11:261 doi:10.1186/1471-2148-11-261

Corballis MC (2007) ‘The uniqueness of human recursive thinking’ American Scientist Volume 95 (3), May 2007, Pages 240-248

Corballis MC (2007) ‘Recursion, language, and starlings’ Cognitive Science 31 (4), Pages 697-704

Everett D (2008) ‘Don’t sleep, there are snakes: Life and language in the Amazonian jungle’ Pantheon Books, New York, NY

Everett, D (2012) ‘Language: the cultural tool’ Profile Books Ltd, London, UK

Gentner TQ et al (2006) ‘Recursive syntactic pattern learning by songbirds’ Nature 440, Pages 1204–1207

Riddles in code; is there a gene for language?

‘I have…’

Words are like genes; on their own they are not very powerful. But apply them with others in the right phrase, at the right time and with the right emphasis, and they can change everything.

‘I have a dream…’

Genes are coded information. They are like the words of a language, and can be combined into a story which tells us who we are.

The stories we choose to tell are powerful; they can change who we become, and also change the people with whom we share them.

‘I have a dream today!’

Language is a means for coding and passing on information, but it is cultural, and definitely non-genetic. Nevertheless, for our speech capacity to have evolved, our ancestors must have had a body equipped to make speech sounds, along with the mental capacity to generate and process this language ‘behaviour’. Our body’s development is orchestrated through the actions of relevant genes. If the physical aspects of language ultimately have a genetic basis, this implies that speech must derive, at least in part, from the actions of our genes.

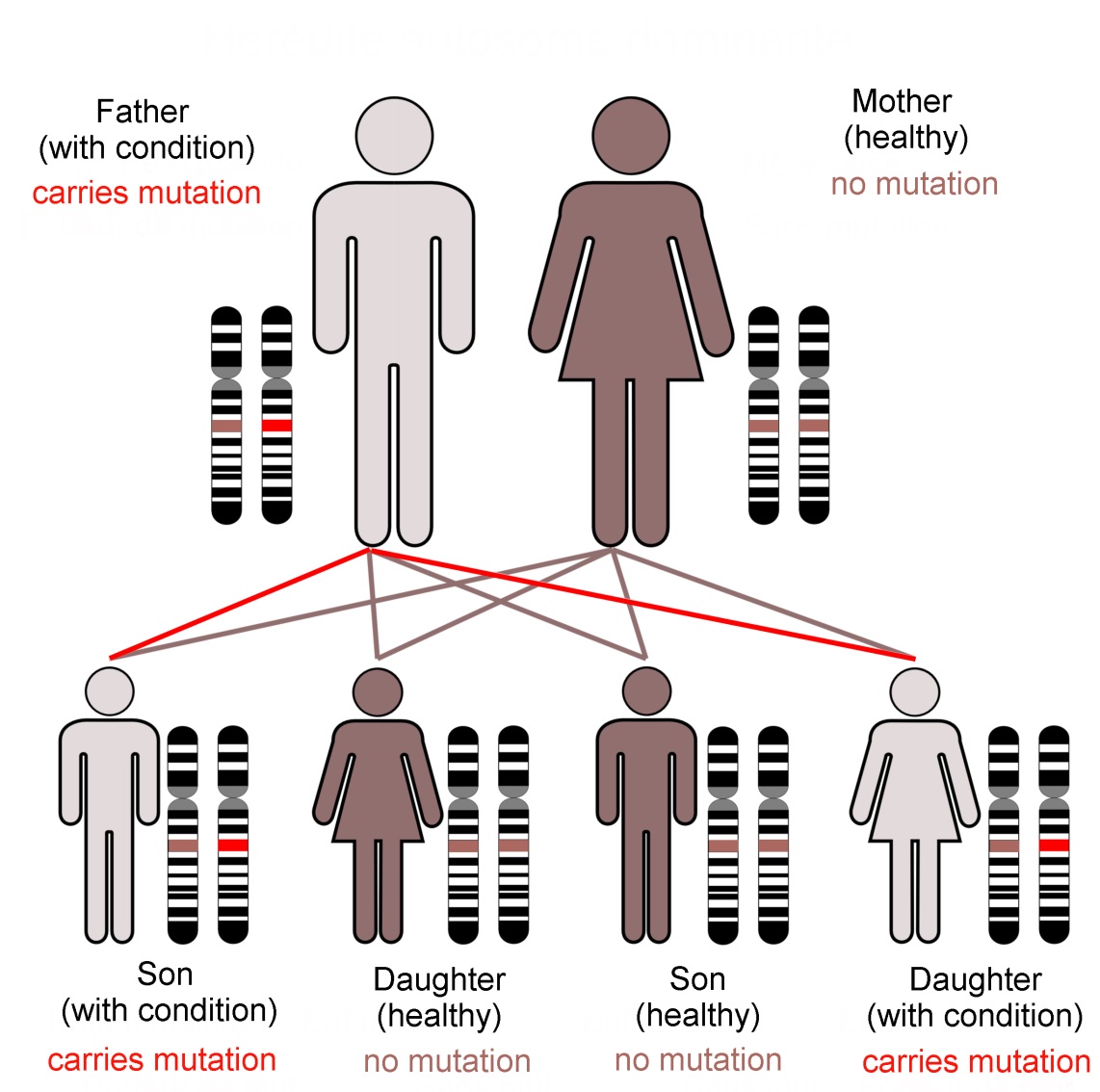

The hunt for genes involved with language led researchers at the University of Oxford to investigate an extended family (known as family KE). Some family members had problems with their speech. The pattern of their symptoms suggested that they inherited these difficulties as a ‘dominant’ character, and through a single gene locus.

The FOXP2 gene encodes the ‘Forkhead-Box Protein-2’; a transcription factor. This is a type of protein that interacts with DNA (shown here as a pair of brown spiral ladders), and influences which genes are turned on in the cell, and which remain silent. This diagram shows two Forkhead box proteins, which associate with each other when active. This bends the DNA strand and makes critical areas of the genetic code more accessible (Image: Wikimedia Commons)

Discovery of another unrelated patient with the same symptoms confirmed that the condition was linked to a gene known as FOXP2 (short for ‘Forkhead Box Protein-2’). This locus encodes a ‘transcription factor’; a protein that influences the activation of many other genes. FOXP2 was subsequently dubbed ‘the gene for language’. Is that correct?

Not really. FOXP2 affects a range of processes, not just speech. The mutation which inactivates the gene causes difficulties in controlling muscles of the face and tongue, problems with compiling words into sentences, and a reduced understanding of language. Neuroimaging studies showed that these patients have reduced nerve activity in the basal ganglia region of the brain. Their symptoms are similar to some of the problems seen in patients with debilitating diseases such as Parkinson’s and Broca’s Aphasia; these conditions also show impairment of the basal ganglia.

Genes code for proteins by using a 4-letter alphabet of adenine, thymine, guanine and cytosine (abbreviated to A, T, G and C). These nucleotides are known as ‘bases’ (they are alkaline in solution) and make matched pairs which form the ‘rungs of the ladder’ of the DNA helix. Substituting one base for another (as happens in many mutations) can change the amino acid sequence of the protein a gene encodes. Changes may make no impact on survival, allowing the DNA sequence to alter over time. Changes that affect critical sections of the protein (e.g. an enzyme’s active site), or critical proteins like FOXP2, are rare (Image: Wikimedia Commons)

Genes provide the code to build proteins. Proteins are assembled from this coding template (the famous triplets) as a sequence of amino acids, strung together initially like the carriages of a train and then folded into their finished form. The amino acid sequences of the FOXP2 protein show very few differences across all vertebrate groups. This strong conservation of sequence suggests that this protein fulfils critical roles for these organisms. In mice, chimpanzees and birds, FOXP2 has been shown to be required for the healthy development of the brain and lungs. Reduced levels of the protein affect motor skills learning in mice and vocal imitation in song birds.
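To see how the triplet code works in practice, here is a minimal sketch in Python. It is an illustration only: the twelve-base DNA sequence is invented (it is not taken from FOXP2), the function name `translate` is our own, and the lookup table holds just a handful of entries from the standard genetic code.

```python
# A small subset of the standard genetic code, written as DNA triplets.
# (The full table has 64 entries; these few are enough for the example.)
CODON_TABLE = {
    "ATG": "Met",   # methionine, the usual 'start' signal
    "GAA": "Glu",   # glutamic acid
    "GAG": "Glu",   # glutamic acid (a second spelling of the same 'word')
    "GTG": "Val",   # valine
    "AAA": "Lys",   # lysine
    "TAA": "Stop",  # end of the protein
}


def translate(dna):
    """Read a DNA string three bases at a time and list the amino acids."""
    protein = []
    for i in range(0, len(dna) - 2, 3):
        amino_acid = CODON_TABLE[dna[i:i + 3]]
        if amino_acid == "Stop":
            break
        protein.append(amino_acid)
    return protein


# Hypothetical sequences, invented for illustration.
original = "ATGGAGAAATAA"
silent_change = "ATGGAAAAATAA"   # GAG -> GAA: one base substituted
altered = "ATGGTGAAATAA"         # GAG -> GTG: one base substituted

print(translate(original))       # ['Met', 'Glu', 'Lys']
print(translate(silent_change))  # ['Met', 'Glu', 'Lys']
print(translate(altered))        # ['Met', 'Val', 'Lys']
```

Swapping GAG for GAA leaves the protein unchanged, because both triplets code for glutamic acid. Swapping GAG for GTG replaces glutamic acid with valine, so a single base substitution alters the finished protein.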

The human and chimpanzee forms of FOXP2 protein differ by only two amino acids. We also share one of these changes with bats. Not only that, but there is only one amino acid difference between FOXP2 from chimpanzees and mice. These differences might look trivial but they are probably significant. FOXP2 has evolved faster in bats than any other mammal, hinting at a possible role for this protein in echolocation.

Mouse brain slice, showing neurons from the somatosensory cortex (20X magnification) producing green fluorescent protein (GFP). Projections (dendrites) extend upwards towards the pial surface from the teardrop-shaped cell bodies. Humanised Foxp2 in mice causes longer dendrites to form on specific brain nerve cells, lengthens the recovery time needed by some neurons after firing, and increases the readiness of these neurons to make new connections with other nerves (synaptic plasticity). The degree of synaptic plasticity indicates how efficiently neurons code and process information (Image: Wikimedia Commons)

Changing the form of mouse FOXP2 to include these two human-associated amino acids alters the pitch of these animals’ ultrasonic calls, and affects their degree of inquisitive behaviour. Differences also appear in their neural anatomy. Altering the number of working copies (the genetic ‘dose’) of FOXP2 in mice and birds affects the development of their basal ganglia.

Mice with ‘humanised’ FOXP2 protein show changes in their cortico-basal ganglia circuits along with altered exploratory behaviour and reduced levels of dopamine (a neurotransmitter that affects our emotional responses). So too, human patients with damage to the basal ganglia show reduced levels of initiative and motivation for tasks.

This suggests that FOXP2 is part of a general mechanism that affects our thinking, particularly around our initiative and mental flexibility. These are critical components of human creativity and are, as it happens, essential for our speech.

Basal ganglia circuits process and organise signals from other parts of the brain into sequences. Speaking involves coordinating a complex sequence of muscle actions in the mouth and throat, and synchronising these with the out-breath. We use these same muscles and anatomical structures to breathe, chew and swallow; our ability to coordinate them affects our speech, although this is not their primary role.

Family KE’s condition, caused by a dominant mutation in the FOXP2 gene, follows an autosomal (not sex-linked) pattern of inheritance, as shown here. Dominant mutations are visible when only one copy of the mutant gene is present. In contrast, a recessive trait is not seen in the organism unless both chromosomes of the pair carry the mutant form of the gene. The FOXP2 transcription factor protein is required in precise amounts for normal function of the brain. The loss of one working FOXP2 gene copy reduces this ‘dose’, which is enough to cause the problems that emerged as family KE’s symptoms (Image: Annotated from Wikimedia Commons)
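The arithmetic behind dominant and recessive inheritance can be sketched in a few lines of Python. This is a simplified model, assuming each parent passes on one of their two gene copies with equal chance. The labels ‘M’ (mutant copy) and ‘n’ (normal copy) and the function name `affected_fraction` are hypothetical, chosen for this example.

```python
from itertools import product


def affected_fraction(parent_a, parent_b, mutant, dominant):
    """Fraction of possible children who show the trait.

    Each parent is a pair of gene copies, e.g. ("M", "n").
    A child receives one copy from each parent.
    """
    children = list(product(parent_a, parent_b))
    if dominant:
        # One mutant copy is enough for the trait to appear.
        affected = [child for child in children if mutant in child]
    else:
        # Recessive: both copies must be the mutant form.
        affected = [child for child in children if child.count(mutant) == 2]
    return len(affected) / len(children)


# Dominant case: one affected parent (one mutant copy), one unaffected parent.
print(affected_fraction(("M", "n"), ("n", "n"), "M", dominant=True))   # 0.5

# Recessive case: two unaffected 'carrier' parents, each with one mutant copy.
print(affected_fraction(("M", "n"), ("M", "n"), "M", dominant=False))  # 0.25
```

Under these assumptions, a parent carrying a single dominant mutation passes the condition to half of their children on average, whereas a recessive trait appears in a quarter of the children of two carriers.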

In practice, very few of our 25,000 genes are individually responsible for noticeable characteristics. Most genetically inherited diseases result from the effects of multiple gene loci. FOXP2 is unusual because of its ‘dominant’ genetic character. It does not give us our language abilities, but it is involved in the neural basis of our mental flexibility and agility at controlling the muscles of our mouths, throats and fingers.

In addition, genes are only part of the story of our development. The way we think and subsequently behave alters our emotional state. Feeling stressed or calm affects which circuits are active in our brain. This alters the biochemical state of body organs and tissues, particularly of the immune system, modifying which genes they are using.

The dance between the code stored in our genes and the consequences of our thoughts builds us into what we are mentally, physically and socially. This story is ours to tell. By our experience, and with this genetic vocabulary, we create what we become.

Text copyright © 2015 Mags Leighton. All rights reserved.

References

Chial H (2008) ‘Rare genetic disorders: Learning about genetic disease through gene mapping, SNPs, and microarray data’ Nature Education 1(1):192 http://www.nature.com/scitable/topicpage/rare-genetic-disorders-learning-about-genetic-disease-979

Clovis YM et al. (2012) ‘Convergent repression of Foxp2 3′UTR by miR-9 and miR-132 in embryonic mouse neocortex: implications for radial migration of neurons’ Development 139, 3332-3342.

Enard, W (2011) ‘FOXP2 and the role of cortico-basal ganglia circuits in speech and language evolution’ Current Opinion in Neurobiology 21; 415–424

Enard, W et al. (2009) ‘A humanized version of Foxp2 affects cortico-basal ganglia circuits in mice’ Cell 137 (5); 961–971 http://www.sciencedirect.com/science/article/pii/S009286740900378X

Feuk L et al. (2006) ‘Absence of a paternally inherited FOXP2 gene in developmental verbal dyspraxia’ American Journal of Human Genetics 79; 965-972

Fisher SE and Scharff C (2009) ‘FOXP2 as a molecular window into speech and language’ Trends in Genetics 25 (4); 166-177

Lieberman P (2009) ‘FOXP2 and Human Cognition’ Cell 137; 800-803

Marcus GF & Fisher SE (2003) ‘FOXP2 in focus; what can genes tell us about speech and language?’ Trends in Cognitive Sciences 7(6); 257-262

Reimers-Kipping S et al. (2011) ‘Humanised Foxp2 specifically affects cortico-basal ganglia circuits’ Neuroscience 175; 75-84

Scharff C & Haesler S (2005) ‘An evolutionary perspective on Foxp2; strictly for the birds?’ Current Opinion in Neurobiology 15; 694-703

Vargha-Khadem F et al. (2005) ‘FOXP2 and the neuroanatomy of speech and language’ Nature Reviews Neuroscience 6, 131-138 http://www.nature.com/nrn/journal/v6/n2/full/nrn1605.html

Wapshott N (2013) ‘Martin Luther King's 'I Have A Dream' Speech Changed The World’ Huffington Post, 28th August 2013 http://www.huffingtonpost.com/2013/08/28/i-have-a-dream-speech-world_n_3830409.html

Webb DM & Zhang J (2005) ‘Foxp2 in song learning birds and vocal learning mammals’ Journal of Heredity 96(3);212-216

Upon reflection; what can we really see in mirror neurons?

“Mirror, mirror on the wall,

who is the fairest of them all?”

“Fair as pretty, right or true;

what means this word ‘fair’ to you?

Fair in manner, moods and ways,

fair as beauty ‘neath a gaze…

Meaning is a given thing.

I cannot my opinion bring

to validate your plain reflection!

You must make your own inspection.”

Mirrors shift our perspective, enabling us to see ourselves directly, and reflect ideas back to us symbolically. But how do we really see ourselves?

Quite recently, neuroscientists discovered a new type of nerve cell in the brains of macaques which form a network across the primary motor cortex, the brain region controlling body movements. These nerves are intriguing; they become active not only when the monkey makes purposeful movements such as grasping food, but also when watching others do the same. As a result, these cells were named ‘mirror neurons’.

In monkeys and other non-human primates, these nerves fire only in response to movements with an obvious ‘goal’ such as grabbing food. In contrast, our mirror network is active when we observe any human movement, whether it is purposeful or not. Our brains ‘mirror’ our own actions and those of others, from speaking to dancing.

However, it is difficult to interpret what function these cells are performing. Different researchers suggest that mirror neurons enable us to:

– assign meaning to actions;

– copy and store information in our short term memory (allowing us to learn gestures including speech);

– read other people’s emotions (empathy);

– be aware of ourselves relative to others (giving us a ‘theory of mind’, i.e. we have a mind, and the contents of other people’s minds are similar to our own).

Whilst these opinions are not necessarily exclusive, they do seem to reflect the different priorities of these experts. Their varied interpretations highlight how difficult it is to be aware of how our beliefs and assumptions affect what our observations can and cannot tell us.

What we can say is that the behaviour of these nerve cells shows that our mirror neuron responses are very different from those of our closest relatives, the other primates.

What do we know about mirror neurons from animals?

A tribe of stump tail macaques (Macaca arctoides) watch their alpha male eating. When these macaques observe a meaningful gesture, such as grabbing for food, this triggers a shift in the electrical status of the same motor neurons in their brain as the observed animal is using to perform the action. This ‘mirroring’ is found in other primates, including humans. (Image: Wikimedia commons)

Researchers at the University of Parma first discovered mirror neurons in an area of the macaque brain which is equivalent to Broca’s area in humans. This brain region assembles actions into ordered sequences, e.g. operating a tool or arranging our words into a phrase. Later studies show these neurons connect right across the monkey motor cortex, and respond to many intentional movements including facial gestures.

The macaque mirror system is activated when they watch other monkeys seize and crack open some nuts, grab some nuts for themselves, or even if they just hear the sound of this happening. Their neurons make no responses to ‘pantomime’ (i.e. a grabbing action made without food present), casual movements, or vocal calls.

Song-learning birds also have mirror-like neurons in the motor control areas in their brains. Male swamp sparrows’ mirror network becomes active when they hear and repeat their mating call. Their complex song is learned by imitating other calls, suggesting a possible role for mirror neurons in learning. This is tantalising, as we do not yet know the extent to which mirror neurons are present in other animals.

![Our visual cortex receives information from the eyes, which is then relayed around the mirror network and mapped onto the motor output to the muscles. First, [purple] the upper temporal cortex (1) receives visual information and assembles a visual description which is sent to parietal mirror neurons (2). These compile an in-body description of the movement, and relay it to the lower frontal cortex (3) which associates the movement with a goal. With these observations complete, inner imitation (red) of the movement is now possible. Information is sent back to the temporal cortex (4) and mapped onto centres in the motor cortex which control body movement (5) (Image: Annotated from Wikimedia Commons)](http://www.42evolution.org/wp-content/uploads/2014/07/G-Mirror-reflex-Carr-etal-2003.jpg)

Our visual cortex receives information from the eyes, which is then relayed around the mirror network to the motor output to the muscles. First, [purple] the upper temporal cortex (1) receives visual information and assembles a visual description of the action. This is sent to parietal mirror neurons (2) which compile an in-body description of the movement, and relay it to the lower frontal cortex (3) where the movement becomes associated with a goal. With these observations complete, an inner imitation (red) of the movement is now possible. Information is sent back to the temporal cortex (4) and mapped onto centres in the motor cortex which control body movement (5) (Image: Annotated from Wikimedia Commons)

The primate research team at Parma suggest the mirror system’s role is in action recognition, i.e. tagging ‘meaning’ to deliberate and purposeful gestures by activating an ‘in-body’ experience of the observed gesture. The mirror network runs across the sensori-motor cortex of the brain, ‘mapping’ the gesture movement onto the brain areas that would operate the muscles needed to make the same movement.