Upon reflection; what can we really see in mirror neurons?

“Mirror, mirror on the wall,

who is the fairest of them all?”

“Fair as pretty, right or true;

what means this word ‘fair’ to you?

Fair in manner, moods and ways,

fair as beauty ‘neath a gaze…

Meaning is a given thing.

I cannot my opinion bring

to validate your plain reflection!

You must make your own inspection.”

Mirrors shift our perspective, enabling us to see ourselves directly, and reflect ideas back to us symbolically. But how do we really see ourselves?

In the 1990s, neuroscientists discovered a new type of nerve cell in the brains of macaques. These cells form a network across the motor cortex, the brain region controlling body movements. These nerves are intriguing; they become active not only when the monkey makes purposeful movements such as grasping food, but also when it watches others do the same. As a result, these cells were named ‘mirror neurons’.

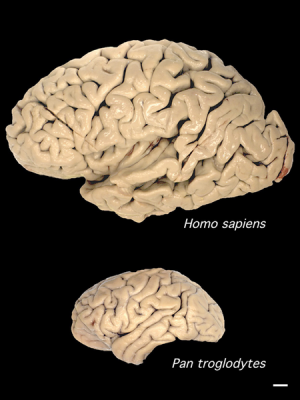

In monkeys and other non-human primates, these nerves fire only in response to movements with an obvious ‘goal’ such as grabbing food. In contrast, our mirror network is active when we observe any human movement, whether it is purposeful or not. Our brains ‘mirror’ our own actions and those of others, from speaking to dancing.

However, it is difficult to interpret what function these cells are performing. Different researchers suggest that mirror neurons enable us to:

– assign meaning to actions;

– copy and store information in our short-term memory (allowing us to learn gestures including speech);

– read other people’s emotions (empathy);

– be aware of ourselves relative to others (giving us a ‘theory of mind’, i.e. we have a mind, and the contents of other people’s minds are similar to our own).

Whilst these opinions are not necessarily exclusive, they do seem to reflect the different priorities of these experts. Their varied interpretations highlight how difficult it is to be aware of how our beliefs and assumptions affect what our observations can and cannot tell us.

What we can say is that the behaviour of these nerve cells shows that our mirror neuron responses are very different from those of our closest relatives, the other primates.

What do we know about mirror neurons from animals?

A tribe of stump tail macaques (Macaca arctoides) watch their alpha male eating. When these macaques observe a meaningful gesture, such as grabbing for food, this triggers a shift in the electrical status of the same motor neurons in their brain as the observed animal is using to perform the action. This ‘mirroring’ is found in other primates, including humans. (Image: Wikimedia Commons)

Researchers at the University of Parma first discovered mirror neurons in an area of the macaque brain which is equivalent to Broca’s area in humans. This brain region assembles actions into ordered sequences, e.g. operating a tool or arranging our words into a phrase. Later studies show these neurons connect right across the monkey motor cortex, and respond to many intentional movements including facial gestures.

The macaque mirror system is activated when they watch other monkeys seize and crack open some nuts, grab some nuts for themselves, or even if they just hear the sound of this happening. Their neurons make no responses to ‘pantomime’ (i.e. a grabbing action made without food present), casual movements, or vocal calls.

Song-learning birds also have mirror-like neurons in the motor control areas in their brains. Male swamp sparrows’ mirror network becomes active when they hear and repeat their mating call. Their complex song is learned by imitating other calls, suggesting a possible role for mirror neurons in learning. This is tantalising, as we do not yet know the extent to which mirror neurons are present in other animals.

![Our visual cortex receives information from the eyes, which is then relayed around the mirror network and mapped onto the motor output to the muscles. First, [purple] the upper temporal cortex (1) receives visual information and assembles a visual description which is sent to parietal mirror neurons (2). These compile an in-body description of the movement, and relay it to the lower frontal cortex (3) which associates the movement with a goal. With these observations complete, inner imitation (red) of the movement is now possible. Information is sent back to the temporal cortex (4) and mapped onto centres in the motor cortex which control body movement (5) (Image: Annotated from Wikimedia Commons)](http://www.42evolution.org/wp-content/uploads/2014/07/G-Mirror-reflex-Carr-etal-2003.jpg)

Our visual cortex receives information from the eyes, which is then relayed around the mirror network to the motor output to the muscles. First, [purple] the upper temporal cortex (1) receives visual information and assembles a visual description of the action. This is sent to parietal mirror neurons (2) which compile an in-body description of the movement, and relay it to the lower frontal cortex (3) where the movement becomes associated with a goal. With these observations complete, an inner imitation (red) of the movement is now possible. Information is sent back to the temporal cortex (4) and mapped onto centres in the motor cortex which control body movement (5) (Image: Annotated from Wikimedia Commons)

The primate research team at Parma suggest the mirror system’s role is in action recognition, i.e. tagging ‘meaning’ to deliberate and purposeful gestures by activating an ‘in-body’ experience of the observed gesture. The mirror network runs across the sensori-motor cortex of the brain, ‘mapping’ the gesture movement onto the brain areas that would operate the muscles needed to make the same movement.

An alternative interpretation is that mirror neurons allow us to understand the intention of another’s action. However, as monkey mirror neurons are not triggered by mimed gestures, the intention of the observed action presumably must be assessed at a higher brain centre before activating the mirror network.

How is the human mirror system different?

Watching another human or animal grabbing some food creates a similar active neural circuit in our mirror network.

The difference is that our nerves are activated by us observing any kind of movement. Unlike monkeys, when we see a mimed movement, we can infer what this gesture means. Even when we stay still we cannot avoid communicating; the emotional content of our posture is readable by others. In particular we readily imitate others’ facial expressions.

As we return a smile, our face ‘gestures’. Marco Iacoboni and co-workers have shown that as this happens, our mirror system activates along with our insula and amygdala. This shows that our mirror neurons connect with the limbic system which handles our emotional responses and memories. This suggests that emotion (empathy) is part of our reading of others’ actions. As we see someone smile and smile back, we feel what they feel.

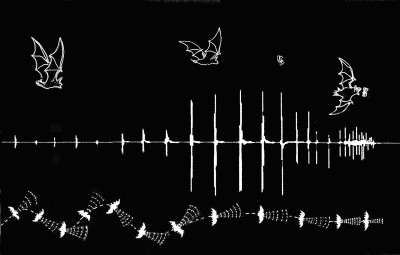

Spoken words deliver more articulated information than can be resolved by hearing alone. Our ability to read and copy the movements of others as they speak may be how we really distinguish and understand these sounds. This ‘motor theory of speech perception’ is an old idea. The discovery of mirror-like responses provides physical evidence of our ability to relate to other people’s movements, suggesting a possible mechanism for this hypothesis.

Sound recording traces of the words ‘nutshell’, ‘chew’ and ‘adagio’. Our speech typically produces over 15 phonemes a second. Our vowels and consonants ‘overlap in time’, and blur together into composite sounds. This means that simply hearing spoken sounds does not provide us with enough information to distinguish words and syllables. In practice we decode words from this sound stream, along with emotional information transmitted through the tone and timbre of phrases, facial expressions and posture (Images: Wikimedia Commons)

Further studies suggest that these mirror neurons are part of a brain-wide network made of various cell types. Alongside the mirror cells are so-called ‘canonical neurons’, which fire only when we move. In addition, ‘anti-mirrors’ activate only when observing others’ movements. Brain imaging techniques show that frontal and parietal brain regions (beyond the ‘classic’ mirror network) are also active during action imitation. It is not clear how the system operates, but in combination we relate to others’ actions through the same nerve and muscle circuits we would use to make the observed movements. We relate in this way to what is happening in someone else’s mind.

Are mirror neurons our mechanism of language in the brain?

Japanese macaques (Macaca fuscata) grooming in the Jigokudani Hotspring in Nagano Prefecture, Japan. Human and monkey vocal sounds arise from different regions of the brain. Primate calls are mostly involuntary, and express emotion. They are processed by inner brain structures, whereas the human speech circuits are located on the outer cortex (Image: Wikimedia Commons)

Mirror-like neurons activate whether we are dancing or speaking. Patients with brain damage that disrupts these circuits have difficulties understanding all types of observed movements, including speech. This suggests that we use our extended mirror network to understand complex social cues.

Our mirror neuron responses to words map onto the same brain circuits as other primates use for gestures. However, the signals producing our speech and monkey vocal calls arise from different brain areas. This suggests that our speech sounds are coded in the brain not as ‘calls’ but as ‘vocal gestures’. This highlights the possible origins of speaking as a form of ‘vocal grooming’, which socially bonded the tribe.

When we think of or hear words, our mirror network activates the sensory, motor and emotional areas of the brain. We thus embody what we think and say. Michael Corballis and others consider that mirror neurons are part of the means by which we have evolved to understand words and melodic sounds as ‘gestures’.

‘Woman Grasping Fruit’ by Abraham Brueghel, 1669; Louvre, Paris. The precision control of the grasping gesture she uses to pluck a fig from the fruit bowl is unique to humans. The intensity of her expression implies many layers of meaning to what we understand from this picture (Image: Wikimedia Commons)

What is unclear is how we put meaning into these words. Some researchers have suggested that mirror neurons anchor our understanding of a word into sensory information and emotions related to our physical experience of its meaning. This would predict that our ‘grasp’ of the meaning of our experiences arises from our bodily interactions with the world.

Vocal gestures would have provided our ancestors with an expanded repertoire of movements to encode with this embodied understanding. Selection could then have elaborated these gestures to include visual, melodic, rhythmical and emotional information, giving us a route to the symbolic coding of our modern multi-modal speech.

We produce different patterns of mirror neuron activity in relation to different vowel and consonant sounds, as well as to different sound combinations. Also, the same mirror neuron patterns appear when we watch someone moving their hands, feet and mouth, or when we read word phrases that mention these movements.

Wilder Penfield used the ‘homunculus’ or ‘little man’ of European folklore to produce his classic diagram of the body as being mapped onto the brain. A version of this is shown here; mirror neurons map incoming information onto the somatosensory cortex (shown left) and outputs to the muscles from the motor cortex (right). These brain regions lie adjacent to each other (Image: Wikimedia Commons)

We process word sequences in higher brain centres at the same time as lower brain circuits coordinate the movements required for speech production and non-verbal cues. Greg Hickok suggests that our speech function operates by integrating these different levels of thinking into the same multi-modal gesture.

Mirror neurons connecting the brain cortex and limbic system may allow us to synchronously process our understanding of an experience with our emotional responses to it. This allows us to consciously control our behaviour, adapt flexibly to our world, and communicate our understanding to others and to ourselves.

Smoke and mirrors; what do these nerves really show and tell?

People floating in the Dead Sea. Our ability to read emotional information from postures means that we can intuit information about people’s emotional state even when they are not visibly moving (Image: Wikimedia Commons)

The word ‘mirror’ conjures up strong images in our minds. This choice of name may have influenced what we are looking for in our data on mirror neurons. However, they appear crucial for language. This and other evidence suggests that our ability to speak and to read meaning into movement is a property of our whole brain and body.

Single nerve measurements show that the mirror neuron network is a population of individual cells with distinct firing thresholds. Different subsets of these neurons are active when we see similar movements made for different purposes. This suggests that the network responds flexibly to our experience.

Cecilia Heyes’ research shows that our mirror network is a dynamic population of cells, modified by the sensory stimulus our brain receives throughout life. She suggests that these mirror cells are ‘normal’ neurons that have been ‘recruited’ to mirroring, i.e. adopted for a specialised role; to correlate our experience of observing and performing the same action.

This gives us a possible evolutionary route for the appearance of these mirror neurons. Recruitment of brain motor cortex cells to networks used for learning by imitation would create a population of mirror cells. This predicts that:

i. Mirror-like networks will be found in animals which learn complex behaviour patterns, such as whales. (They are already known in songbirds.)

One of the many dogs Ivan Pavlov used in his experiments (possibly Baikal); Pavlov Museum, Ryazan, Russia. Note the saliva catching container and tube surgically implanted in the dog’s muzzle. These dogs were regularly fed straight after hearing a bell ring. In time, the sound of the bell alone made them salivate in anticipation of food. This experience had trained them to code the bell sound with a symbolic meaning, i.e. to indicate the imminent arrival of food (Image: Wikimedia Commons)

ii. It should be possible to generate a mirror-like network in other animals by training them to associate a stimulus with a meaning, perhaps a symbolic meaning as in Pavlov’s famous ‘conditioned reflex’ experiments with dogs.
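As a purely illustrative sketch of the recruitment idea, the toy Python model below shows how an ordinary motor neuron could come to respond to the sight of an action after repeatedly seeing and performing it together. It is not Cecilia Heyes’ model or any published implementation; the Hebbian-style update rule, the function names `train_visual_weight` and `fires`, and every numerical value are assumptions chosen only for illustration.

```python
# Toy illustration only: one model "motor neuron" with a fixed motor input
# and a plastic visual input. All names and numbers here are assumptions.

def train_visual_weight(paired_trials, learning_rate=0.1, motor_weight=1.0):
    """Return the visual-input weight after Hebbian-style training.

    On each trial the animal performs an action and sees it at the same
    time, so the visual input and the neuron's activity are correlated.
    """
    visual_weight = 0.0  # before training, sight alone has no effect
    for _ in range(paired_trials):
        perform, observe = 1.0, 1.0
        activity = motor_weight * perform + visual_weight * observe
        # Hebbian-style rule: strengthen an input that is active with the cell
        visual_weight += learning_rate * observe * activity
        visual_weight = min(visual_weight, 1.0)  # keep the weight bounded
    return visual_weight


def fires(visual_weight, perform, observe, motor_weight=1.0, threshold=0.5):
    """True if the summed input reaches the (assumed) firing threshold."""
    return motor_weight * perform + visual_weight * observe >= threshold


naive = train_visual_weight(paired_trials=0)
trained = train_visual_weight(paired_trials=20)

print(fires(naive, perform=1, observe=0))    # acting, before any training
print(fires(naive, perform=0, observe=1))    # watching, before any training
print(fires(trained, perform=0, observe=1))  # watching, after paired experience
```

In this sketch the cell starts out as a plain motor neuron and only gains its ‘mirror’ response through correlated experience, which is the sense in which a normal neuron might be ‘recruited’.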

Mirror neurons, then, show us that something unusual is going on in our brain. They reveal that we use all of our senses to relate physically to movement and emotion in others, and to understand our world. They are part of the system we use to learn and imitate words and actions, communicate through language, and interact with our world as an embodied activity.

However, beyond this, we cannot yet see what else they reveal. Until we do, our conclusions about these neurons must remain ‘as dim reflections in a mirror’.

Conclusions

- Monkey mirror neurons relate the observations of intentional movements to a sense of meaning.

- The human mirror network activates in response to all types of human movements, including the largely ‘hidden’ movements of our vocal apparatus when we speak.

Double rainbow. The second rainbow results from a double reflection of sunlight inside the raindrops; the raindrops act like a mirror as well as a prism. The colours of this extra bow are in reverse order to the primary bow, and the unlit sky between the bows is called Alexander’s band, after Alexander of Aphrodisias who first described it. (Image: Wikimedia Commons)

- These neurons are a component of the neural network that allows us to internally code meaning into our words, and ‘embody’ our memory of the idea they symbolise.

- The mirror network neurons seem to be part of an expanded empathy mechanism that connects higher and lower brain areas, allowing us to understand our diverse experiences from objects to ideas.

- These cells are recruited into the mechanism by which we learn symbolic associations between items (such as words and their meaning). This shows that it is our thinking process, rather than the cells of our brain, that makes us uniquely human.

Text copyright © 2015 Mags Leighton. All rights reserved.

References

Aboitiz, F & García V R (1997) The evolutionary origin of the language areas in the human brain. A neuroanatomical perspective Brain Research Reviews 25(3);381-396. doi: 10.1016/S0165-0173(97)00053-2

Aboitiz, F et al. (2005) ‘Imitation and memory in language origins’ Neural Networks 18(10);1357. doi: 10.1016/j.neunet.2005.04.009

Arbib, M (2005) ‘The mirror system hypothesis; how did protolanguage evolve?’ Ch 2 (p21-47 ) in Language Origins - Tallerman M (ed), Oxford University Press, Oxford.

Arbib, M A (2005) ‘From monkey-like action recognition to human language; an evolutionary framework for neurolinguistics.’ The behavioural and Brain Sciences 2: 105-124

Aziz-Zadeh L et al (2006) ‘Congruent Embodied Representations for Visually Presented Actions and Linguistic Phrases Describing Actions’ Current Biology 16(18); 1818-1823

Braadbaart, L (2014) ‘The shared neural basis of empathy and facial imitation accuracy’ NeuroImage 84; 367 – 375

Bradbury J (2005) ‘Molecular Insights into Human Brain Evolution’. PLoS Biology 3/3/2005, e50 doi:10.1371/journal.pbio.003005

Carr, L. et al. (2003) ‘Neural mechanisms of empathy in humans: A relay from neural systems for imitation to limbic areas’ Proceedings of the National Academy of Sciences of the United States of America 100(9); 5497-5502

Catmur, C. et al (2011) ‘Making mirrors: Premotor cortex stimulation enhances mirror and counter-mirror motor facilitation’ Journal of Cognitive Neuroscience, 23 (9), pp. 2352-2362. doi: 10.1162/jocn.2010.21590 http://www.mitpressjournals.org/doi/pdf/10.1162/jocn.2010.21590

Catmur C et al. (2007) ‘Sensorimotor Learning Configures the Human Mirror System’ Current Biology 17(17) 1527-1531 http://www.sciencedirect.com/science/journal/09609822

Corballis MC (2002) ‘From Hand to Mouth: The Origins of Language’ Princeton University Press, Princeton, NJ, USA

Corballis MC (2003) ‘From mouth to hand: Gesture, speech, and the evolution of right-handedness’ Behavioral and Brain Sciences 26(2); 199-208

Corballis, M (2010) ‘Mirror neurons and the evolution of language’ Brain and Language 112(1); 25-35 doi: 10.1016/j.bandl.2009.02.002

Corballis, M.C. (2012) ‘How language evolved from manual gestures’ Gesture 12(2); PP. 200 – 226

Ferrari PF et al. (2003) ‘Mirror neurons responding to the observation of ingestive and communicative mouth actions in the monkey ventral premotor cortex’ European Journal of Neuroscience 17 (8); 1703–1714

Ferrari, P.F. et al (2006) ‘Neonatal imitation in rhesus macaques’ PLoS Biology, 4 (9), pp. 1501-1508 doi: 10.1371/journal.pbio.0040302 http://biology.plosjournals.org/archive/1545-7885/4/9/pdf/10.1371_journal.pbio.0040302-L.pdf

Galantucci, B et al (2006) ‘The motor theory of speech perception reviewed’ Psychon Bull Rev. 2006 June; 13(3): 361–377. PMCID: PMC2746041 NIHMSID: NIHMS136489 http://www.ncbi.nlm.nih.gov/pmc/articles/PMC2746041/

Gallese V et al (1996) ‘Action recognition in the premotor cortex’ Brain 119:593–609

Gentilucci M & Corballis MC (2006) ‘From manual gesture to speech: A gradual transition’ Neuroscience & Biobehavioral Reviews 30(7); 949-960

Hage, S.R., Jürgens, U. (2006) Localization of a vocal pattern generator in the pontine brainstem of the squirrel monkey European Journal of Neuroscience 23(3); 840 – 844 doi: 10.1111/j.1460-9568.2006.04595.x

Heyes C (2010) ‘Where do mirror neurons come from?’ Neuroscience & Biobehavioral Reviews 34(4); 575-583 http://www.sciencedirect.com/science/article/pii/S0149763409001730

Heyes CM (2001) ‘Causes and consequences of imitation’ Trends in Cognitive Sciences 5; 245–261

Hickok G (2012) ‘Computational neuroanatomy of speech production’ Nature Reviews Neuroscience 13, 135-145 doi:10.1038/nrn3158

Jürgens, U (2003) From mouth to mouth and hand to hand: On language evolution Behavioral and Brain Sciences 26(2); 229-230

Kemmerer D and Gonzalez-Castillo J (2008) ‘The Two-Level Theory of verb meaning: An approach to integrating the semantics of action with the mirror neuron system’ Brain Lang. 112(1);54-76 doi: 10.1016/j.bandl.2008.09.010. Epub 2008 Nov 8.

Keysers C & Gazzola V (2009) ‘Expanding the mirror: vicarious activity for actions, emotions, and sensations’ Curr Opin Neurobiol. 2009 Dec;19(6):666-71. doi: 10.1016/j.conb.2009.10.006. Epub 2009 Oct 31. Review.

Kohler E et al (2002) ‘Hearing Sounds, Understanding Actions: Action Representation in Mirror Neurons’ Science 297(5582); 846-848 DOI: 10.1126/science.1070311

Molenberghs P et al (2012) ‘Activation patterns during action observation are modulated by context in mirror system areas’ NeuroImage 59(1); 608–615

Molenberghs P et al. (2012) ‘Brain regions with mirror properties: A meta-analysis of 125 human fMRI studies’ Neuroscience & Biobehavioral Reviews 36(1); 341-349 http://dx.doi.org/10.1016/j.neubiorev.2011.07.004

Molenberghs P, et al. (2009) Is the mirror neuron system involved in imitation? A short review and meta-analysis. Neurosci Biobehav Rev. 2009 Jul;33(7):975-80. doi: 10.1016/j.neubiorev.2009.03.010. Epub 2009 Apr 1.

Mukamel R et al (2010) Single-neuron responses in humans during execution and observation of actions Current biology 20(8); 750–756 http://dx.doi.org/10.1016/j.cub.2010.02.045

Pohl, A et al. (2013) ‘Positive Facial Affect – An fMRI Study on the Involvement of Insula and Amygdala’ PLoS One 8(8): e69886. doi:10.1371/journal.pone.0069886 PMCID: PMC3749202

Prather, J. F., Peters, S., Nowicki, S., Mooney, R. (2008). "Precise auditory-vocal mirroring in neurons for learned vocal communication." Nature 451: 305-310.

Pulvermüller F (2005) ‘Brain mechanisms linking language and action’ Nature Reviews Neuroscience 6:576-582

Pulvermüller F et al (2005) ‘Brain signatures of meaning access in action word recognition’ Journal of Cognitive Neuroscience 17;884-892

Pulvermüller F et al (2006) ‘Motor cortex maps articulatory features of speech sounds’ PNAS 103 (20); 7865–7870 doi: 10.1073/pnas.0509989103

Rizzolatti G & Luppino G (2001) ‘The cortical motor system’ Neuron 31:889–901

Rizzolatti G (1996) ‘Premotor cortex and the recognition of motor actions’ Cogn. Brain Res. 3:131–41

Pavlov IP (1927) ‘Conditioned Reflexes; an investigation of the physiological activity of the cerebral cortex’ OUP, London (republished 2003 as ‘Conditioned Reflexes’ Dover Publications Ltd, NY, USA)

Rizzolatti G, et al (1996) ‘Localization of grasp representation in humans by PET: 1. Observation versus execution’ Exp. Brain Res. 111:246–52

Rizzolatti G, Fogassi L, Gallese V. 2002. ‘Motor and cognitive functions of the ventral premotor Cortex’ Curr. Opin. Neurobiol. 12:149–54

Rizzolatti G. et al (2001) ‘Neurophysiological mechanisms underlying the understanding and imitation of action’ Nature Reviews Neuroscience,2(9);661-670. doi: 10.1038/35090060

Umilta et al (2001) ‘I know what you are doing. A neurophysiological study’ Neuron 31:155-165